Working with huge CSV files

I was working with 'Efficient IP IPAM' and needed to verify some data for a certain subnet and the host entries contained therein. I decided to try and export the data somehow from the system to be able to work with it locally. Working directly on the Web GUI is not really an option, as you would never get a good overview of multiple entries. I quickly realized that the export option i would have to use is the RAW data option. OK fine, let's try it out.

It produced a CSV file containing 873(!) unique header columns! This database has been extended and modified many times over the years and all of the custom attributes added has really made this grow over time.

This file is difficult to handle in, for example Excel, or even to make sense of what the export file contains.

To make this data more digestible, I usually start with something like this quick bash snippet like the one below:

cat export.csv | head -n 1 | sed s/','/\\n/gShow CSV headers and insert a newline for each header value

This gives you the header values as below:

ip

hostname

...

custom_attribute1

custom_attribute2

aruba_serial

aruba_mac

lcm_device_type

In my case i was only interested in some generic ip, hostname and custom attributes containing the word 'aruba' or 'lcm'.

All of this be easily handled in pure bash, but I like to use the Python library pandas for most of my CSV needs. It's a bit more complicated but it gives you the flexibility to connect different types of output formatters (xlsx, pdf, csv...), and by using the streaming functions of pandas or different parser engines you will be able to handle really large amounts of data and leverage multiple cores of your CPU to speed up the processing if needed.

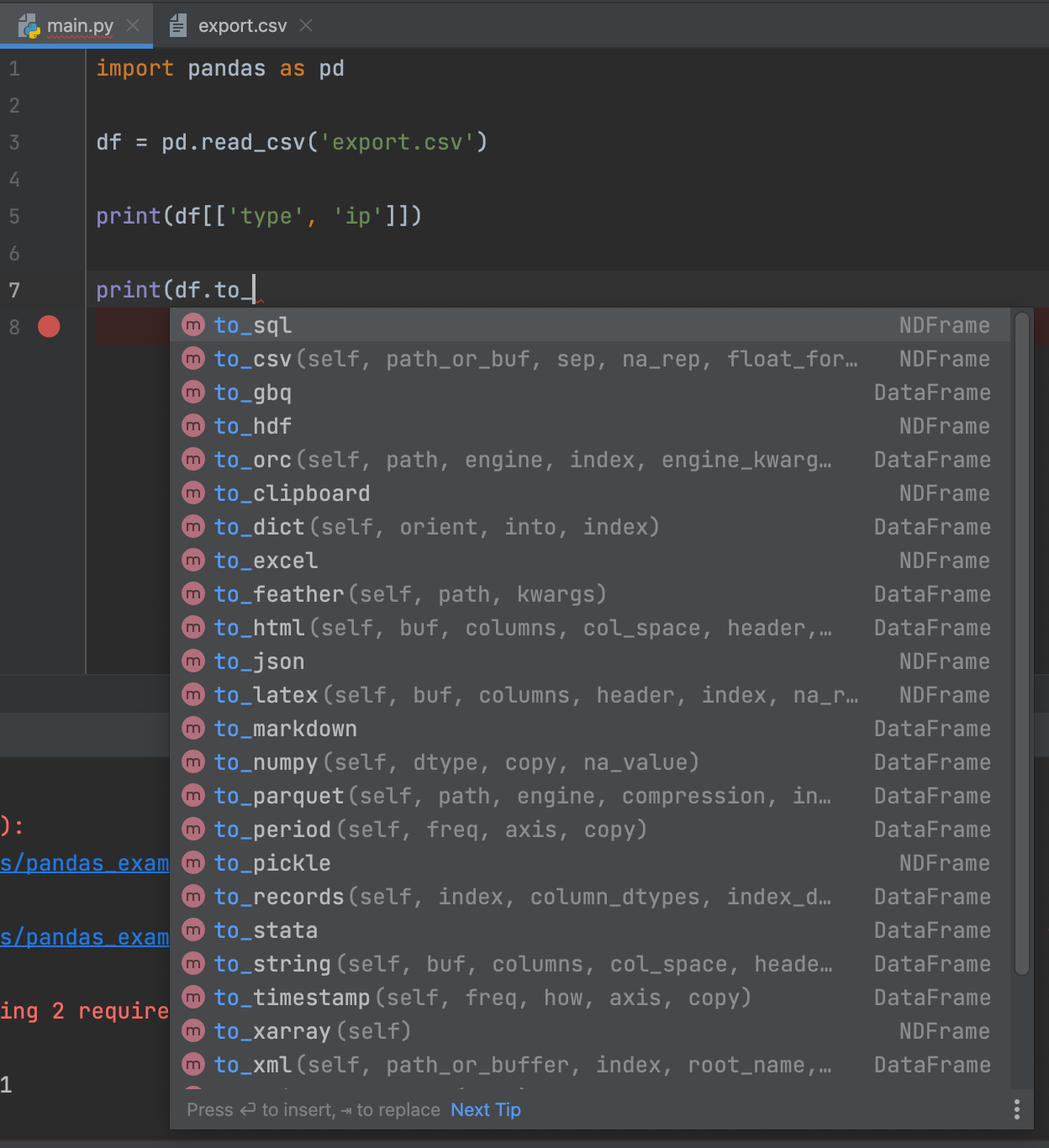

These are some default output formatters that are supported in Pandas 2.2.1:

This is a simplified version of the script:

from tabulate import tabulate

import pandas as pd

df = pd.read_csv('export.csv')

print(df[['hostname', 'ip', 'aruba', 'aruba_serial', 'aruba_mac']].to_markdown(index=False))

Output as Markdown, only selecting the necessary columns

This is how the markdown looks from the above script:

| hostname | ip | aruba | aruba_serial | aruba_mac |

|:-----------|:---------|:--------|:---------------|:------------------|

| switch1 | 10.0.0.1 | y | X213HDJNC | EC-A8-33-89-52-D7 |

| switch2 | 10.0.0.2 | y | X2134NUIN | BE-FB-EA-D0-BD-98 |

And this is how it looks rendered on a webpage that supports Markdown syntax:

| hostname | ip | aruba | aruba_serial | aruba_mac |

|---|---|---|---|---|

| switch1 | 10.0.0.1 | y | X213HDJNC | EC-A8-33-89-52-D7 |

| switch2 | 10.0.0.2 | y | X2134NUIN | BE-FB-EA-D0-BD-98 |

The input file only contains some example data that I made up for this post, but with this, I would like to show how powerful the pandas library can be when working with different types of datasets.

I used this for duplicate detection of serial numbers or MAC addresses in stacked switches, and since the IPAM was used as a source of truth for other integration components, this data had to be accurate.

Each time I use this library, I learn something new from it, and most importantly, save a lot of time.